What are Digital Graphics?

This article aims to give a better understanding of what digital graphics are, and gives basic descriptions of complex technical terms.

Digital graphics covers many different topics; this article looks at the following:

- Pixels – Bit Rate, RGB, Resolution

- Raster and Vector Image Formats

- Bit Depth

- Colour Space

- Image Capture

As well as these, there are various applications of digital graphics in interactive media, and considerations of how an image looks as a 'finished output'.

Pixels

What is a pixel?

The word pixel is short for PICture ELement, and it is the smallest part of a digital picture. It is essentially a small square of a single colour, and many of these squares together make up an image; each 'square' is a sample of the original image, as can be seen below:

An image displayed on the monitor is made up of a grid of horizontal and vertical dots called pixels. The number of pixels that can be displayed on the screen at one time is normally called the resolution of the image (or screen) and is expressed as a pair of numbers such as 640x480.

While the pixel is the smallest element of a video image, it is not the smallest element of a monitor's screen. Since each pixel on a colour display must be made up of three separate colours, there are in fact smaller red, green and blue dots on the surface that make up the image. The term "dot" is used to refer to these small elements that make up the displayed image on the screen.

Depending on the bit depth of a monitor or image (the number of bits used to store each pixel's colour), anywhere from two to millions of colours can be assigned to each pixel. Each pixel is assigned a ‘tonal’ value (black, white, shades of grey or colour), which is represented in binary code (zeros and ones). As you can see from the above image, the cross section shows the pixelated ear of the deer; each of these pixels has its own colour value and its own binary code.
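To make the idea of a pixel's binary code more concrete, here is a minimal Python sketch. It assumes 8 bits per colour channel (24-bit colour, covered under Bit Depth); the function name `pixel_to_binary` and the sample colour are hypothetical, not taken from the deer image.

```python
def pixel_to_binary(red: int, green: int, blue: int) -> str:
    """Return the 24-bit binary code for an 8-bit-per-channel RGB pixel."""
    for value in (red, green, blue):
        if not 0 <= value <= 255:
            raise ValueError("each channel must be in the range 0-255")
    # format(value, "08b") writes each channel as exactly eight 0s and 1s
    return " ".join(format(value, "08b") for value in (red, green, blue))


# A hypothetical light-brown pixel
print(pixel_to_binary(181, 137, 90))  # prints 10110101 10001001 01011010
```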

Bit Rate

What is a bit?

A bit, short for binary digit, is the smallest unit of information on a computer. A single bit can hold either a 0 or a 1 (a binary number). More meaningful information is obtained by combining bits into larger units called bytes.

A single byte is composed of 8 bits, and bytes are grouped into larger units known as:

- Kilobyte : 1,024 bytes

- Megabyte : 1,048,576 bytes

- Gigabyte : 1,073,741,824 bytes

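The list goes even higher, through the terabyte, petabyte, exabyte and zettabyte, up to the yottabyte. Since each step is 1,024 times larger than the last, a yottabyte is 1,024 to the power of 5 (roughly 1.1 quadrillion) times larger than a gigabyte!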
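The jump from gigabyte to yottabyte can be checked with a small Python sketch. It follows the 1,024-based convention used in the list above (strictly, the IEC names for these binary units are kibibyte, mebibyte and so on); `UNITS` and `unit_size` are illustrative names.

```python
UNITS = ["byte", "kilobyte", "megabyte", "gigabyte", "terabyte",
         "petabyte", "exabyte", "zettabyte", "yottabyte"]


def unit_size(name: str) -> int:
    """Number of bytes in a unit, using the binary convention (1 KB = 1,024 bytes)."""
    return 1024 ** UNITS.index(name)


for name in UNITS[1:4]:
    print(f"1 {name} = {unit_size(name):,} bytes")

# How many gigabytes fit in one yottabyte? Five steps of 1,024.
ratio = unit_size("yottabyte") // unit_size("gigabyte")
print(f"1 yottabyte = {ratio:,} gigabytes")  # 1,125,899,906,842,624
```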

Bit Rate

Bit rate is the number of bits transferred between devices per second. It is also known as the data transfer rate: the speed at which data is transmitted from one device to another. Data rates are usually measured in megabits per second (Mbps); note that a megabit (Mb) is one-eighth of a megabyte (MB).
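As a rough illustration of why the bits/bytes distinction matters, this Python sketch estimates how long a file takes to transfer. It assumes the link runs at its full rated speed with no protocol overhead, and that "mega" means 1,000,000, as it normally does for network rates; `transfer_seconds` is a hypothetical helper.

```python
def transfer_seconds(file_size_bytes: int, bit_rate_mbps: float) -> float:
    """Time to send a file over a link rated in megabits per second (Mbps)."""
    bits = file_size_bytes * 8                   # 8 bits in every byte
    bits_per_second = bit_rate_mbps * 1_000_000  # network rates use decimal mega (10**6)
    return bits / bits_per_second


# A hypothetical 25,000,000-byte photo over a 100 Mbps connection
print(transfer_seconds(25_000_000, 100))  # 2.0
```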

RGB

The RGB colour model is an additive colour model in which red, green and blue light are added together in various ways to reproduce a broad range of colours. The name of the model comes from the initials of these three colours, which are the additive primary colours.
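Additive mixing can be sketched in Python by adding channel values together. This is a simplified model that assumes 8-bit channels which simply clip at 255; real displays also involve gamma and device characteristics, so treat it as an illustration only. `add_light` is a hypothetical name.

```python
def add_light(*colours: tuple[int, int, int]) -> tuple[int, int, int]:
    """Additively mix RGB light sources, clipping each channel at 255."""
    # zip(*colours) groups all the red values, then green, then blue
    return tuple(min(255, sum(channel)) for channel in zip(*colours))


RED, GREEN, BLUE = (255, 0, 0), (0, 255, 0), (0, 0, 255)

print(add_light(RED, GREEN))        # (255, 255, 0)   yellow
print(add_light(GREEN, BLUE))       # (0, 255, 255)   cyan
print(add_light(RED, GREEN, BLUE))  # (255, 255, 255) white
```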

The main purpose of the RGB color model is for the display of images in electronic systems, such as televisions and computers, though it has also been used in conventional photography.

RGB is what is known as a device-dependent colour model: different devices detect or reproduce a given RGB value differently, since the colour elements and their response to the individual R, G and B levels vary between manufacturers, or even in the same device over time.

Without some form of colour management, different devices will not display the same RGB code as the same colour.

Typical input devices for the standard RGB setup include:

- Colour TV and video cameras

- Image scanners

- Digital cameras

RGB output devices include almost anything with a screen, such as:

- LCD/Plasma TV

- Computer and mobile phone displays

- Video projectors

- Multicolour LCD displays

In contrast to the additive RGB colour model is the subtractive model known as CMYK. This is detailed below:

CMYK

CMYK stands for Cyan, Magenta, Yellow and Key (black). It is a subtractive colour model, used when mixing paints, dyes and inks to create a very large range and spectrum of colours. This is done by absorbing some colours of light and reflecting others.

How does this work?

In simple terms, a colour source such as ink or paint sits between the light source and the viewer and absorbs some of the light's wavelengths; the wavelengths that are reflected back give it its colour. For example, cyan ink absorbs red light and reflects green and blue.

A colour printer is an example of a device that uses the CMYK model.
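The relationship between RGB and CMYK can be illustrated with the common textbook conversion below, written in Python. It is a naive sketch: real print workflows use colour management (ICC profiles) tied to specific inks and paper, which this ignores. `rgb_to_cmyk` is a hypothetical helper.

```python
def rgb_to_cmyk(red: int, green: int, blue: int) -> tuple[float, float, float, float]:
    """Naive RGB (0-255) to CMYK (0.0-1.0) conversion with no colour management."""
    r, g, b = red / 255, green / 255, blue / 255
    k = 1 - max(r, g, b)            # black (key) covers the darkness common to all inks
    if k == 1:                      # pure black: only black ink is needed
        return 0.0, 0.0, 0.0, 1.0
    c = (1 - r - k) / (1 - k)       # cyan absorbs red light
    m = (1 - g - k) / (1 - k)       # magenta absorbs green light
    y = (1 - b - k) / (1 - k)       # yellow absorbs blue light
    return round(c, 3), round(m, 3), round(y, 3), round(k, 3)


print(rgb_to_cmyk(255, 0, 0))    # (0.0, 1.0, 1.0, 0.0): red = magenta + yellow ink
print(rgb_to_cmyk(0, 255, 255))  # (1.0, 0.0, 0.0, 0.0): cyan ink only
```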

Pixel Resolution

The term resolution is often used to mean pixel count in digital imaging, although international standards (including American and Japanese standards) for digital cameras specify that it should not be used this way. For explanation purposes, resolution is written here as

N x M

The first number is the number of pixel columns (width) and the second is the number of pixel rows (height); for example, 640 by 480 means 640 pixels wide by 480 pixels high.

Another way to express the resolution is to give the total number of pixels in the image, usually stated in megapixels. Megapixels are calculated by multiplying the width by the height and dividing by one million. For example, the 640x480 resolution shown above is 307,200 pixels, or about 0.3 megapixels.
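The megapixel calculation is simple arithmetic; a short Python sketch, using a hypothetical `megapixels` helper and an assumed 4000x3000 camera for comparison, makes it explicit:

```python
def megapixels(width: int, height: int) -> float:
    """Total pixel count in millions (width x height / 1,000,000)."""
    return width * height / 1_000_000


print(megapixels(640, 480))            # 0.3072
print(round(megapixels(640, 480), 1))  # 0.3
print(megapixels(4000, 3000))          # 12.0
```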

Below is an illustration of how the same image might appear at different pixel resolutions, if the pixels were rendered as sharp squares.

Raster Image format

A raster image, also known as a bitmap image, is a way to represent digital images. Raster images come in a wide variety of formats, including the familiar .gif, .jpg and .bmp. A raster image represents an image as a series of bits of information which translate into pixels on the screen. These pixels form points of colour which create the overall finished image.

When this type of image is created, the image is broken down into pixels, and each pixel is assigned a specific value which determines its colour. Raster images commonly use the red, green, blue (RGB) colour system. With 8 bits per channel, an RGB value of 0,0,0 is black and each channel can go up to 255 (256 possible values), so 255,255,255 is white; this allows a wide range of colour values to be expressed. In photographs with subtle shading, this can be extremely valuable.

Below is an example of an image with an RGB value assigned to each pixel.

When a raster image is viewed, the pixels usually blend together visually, so the viewer sees a smooth photograph or drawing. When the image is enlarged, the individual pixels become apparent. While this effect is sometimes a deliberate choice on the part of an artist, it is usually not desired. Depending on resolution, some raster images can be enlarged to very large sizes, while others quickly become difficult to see. The lower the resolution, the smaller the digital image file, so people who work with computer graphics must find a balance between resolution and file size.
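Why enlarging a raster image makes the pixels apparent can be shown with nearest-neighbour scaling, one of the simplest resizing methods (image editors usually also offer smoother ones such as bilinear or bicubic). In this Python sketch each pixel is just copied into a larger block, so no new detail appears. The 2x2 image and `enlarge_nearest` are hypothetical.

```python
def enlarge_nearest(pixels: list[list[int]], factor: int) -> list[list[int]]:
    """Enlarge a raster by repeating each pixel factor x factor times (nearest neighbour)."""
    enlarged = []
    for row in pixels:
        # Repeat every pixel horizontally...
        wide_row = [value for value in row for _ in range(factor)]
        # ...then repeat the whole row vertically
        enlarged.extend([list(wide_row) for _ in range(factor)])
    return enlarged


# A hypothetical 2 x 2 grayscale image (0 = black, 255 = white)
tiny = [[0, 255],
        [255, 0]]
for row in enlarge_nearest(tiny, 3):
    print(row)
```

The output is a 6x6 grid made of four solid 3x3 blocks: the image is bigger, but it is still only four pixels' worth of information.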

Resolution can also refer to the number of pixels per inch (PPI) or dots per inch (DPI) used when an image is displayed or printed. The higher the resolution, the greater the number of pixels, which allows more detail to be preserved as the image is enlarged. However, the more pixels there are, the more individual points of data must be stored, so the file size grows. For high-quality photography, a high resolution is preferred because the images look more appealing to the viewer. For small images which do not need to be enlarged, or when quality is not important, a lower resolution can be used.

Raster graphics are resolution dependent: they cannot be scaled up to a higher resolution without loss of quality. This contrasts with vector graphics (explained in further detail below), which scale easily to the resolution of the device rendering them. Raster graphics are more practical than vector graphics for photographs and photo-realistic images, while vector graphics often serve better for typesetting or graphic design. Modern computer monitors typically display about 72 to 130 pixels per inch (PPI), and some modern printers can print 2400 dots per inch (DPI) or more; determining the most appropriate image resolution for a given printer resolution can be difficult, since printed output may have a greater level of detail than a viewer can discern on a monitor. Typically, a resolution of 150 to 300 pixels per inch works well for four-colour process (CMYK) printing.
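The link between pixel dimensions, PPI and print size can be sketched in Python as below. The helper names and the 12-megapixel example are assumptions for illustration; 300 PPI is taken from the upper end of the 150 to 300 PPI range mentioned above.

```python
def print_size_inches(width_px: int, height_px: int, ppi: int) -> tuple[float, float]:
    """Largest print size (in inches) at a given pixels-per-inch setting."""
    return width_px / ppi, height_px / ppi


def pixels_needed(width_in: float, height_in: float, ppi: int) -> tuple[int, int]:
    """Pixel dimensions needed to print at a given size and PPI."""
    return round(width_in * ppi), round(height_in * ppi)


# A hypothetical 12-megapixel photo (4000 x 3000) printed at 300 PPI
print(print_size_inches(4000, 3000, 300))  # about 13.3 x 10.0 inches
# A 6 x 4 inch print at 300 PPI
print(pixels_needed(6, 4, 300))            # (1800, 1200)
```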

File Compression

There are two major forms of data compression: lossy and lossless compression.

Lossy Compression

A compression technique that does not decompress data back to 100% of the original. Lossy methods provide high degrees of compression and result in very small compressed files, but there is a certain amount of loss when they are restored.

Audio, video and some imaging applications can tolerate loss, and in many cases, it may not be noticeable to the human ear or eye. In other cases, it may be noticeable, but not that critical to the application. The more tolerance for loss, the smaller the file can be compressed, and the faster the file can be transmitted over a network. Examples of lossy file formats are MP3, AAC, MPEG and JPEG.
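Lossy compression can be illustrated with quantisation, which is one ingredient of formats such as JPEG (JPEG actually quantises frequency coefficients rather than raw pixel values, so this is a deliberately simplified toy). The Python sketch below throws away fine detail, so the restored data is close to, but not the same as, the original. All names and sample values are hypothetical.

```python
def lossy_compress(samples: list[int], step: int) -> list[int]:
    """Quantise 8-bit samples: keep only which 'bucket' each value falls in."""
    return [value // step for value in samples]


def decompress(codes: list[int], step: int) -> list[int]:
    """Rebuild approximate samples from the middle of each bucket."""
    return [min(255, code * step + step // 2) for code in codes]


original = [12, 13, 15, 200, 201, 203]
codes = lossy_compress(original, step=16)  # smaller numbers, fewer distinct values
restored = decompress(codes, step=16)

print(codes)                 # [0, 0, 0, 12, 12, 12]
print(restored)              # [8, 8, 8, 200, 200, 200]
print(original == restored)  # False: the fine detail is gone for good
```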

Lossless Compression

A compression technique that decompresses data back to its original form without any loss. The decompressed file and the original are identical. All compression methods used to compress text, databases and other business data are lossless. For example, the ZIP archiving technology (PKZIP, WinZip, etc.) is a widely used lossless method.
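Lossless compression can be demonstrated with Python's standard `zlib` module, which implements DEFLATE, the same algorithm most ZIP archives use. The sample data is made up and highly repetitive; real files will compress by different amounts.

```python
import zlib

# Hypothetical data with lots of repetition, which lossless methods exploit
original = b"ABABABABAB" * 1000

compressed = zlib.compress(original, level=9)  # maximum DEFLATE compression
restored = zlib.decompress(compressed)

print(len(original), "->", len(compressed), "bytes")
print(restored == original)  # True: every byte is recovered exactly
```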

Below is a very simple image showing the various types of compression, and the effect each has when the data is restored.

Common raster file formats include:

- .bmp - This is a (usually uncompressed) raster image made up of a rectangular grid of pixels; it contains a file header (bitmap identifier, file size, width, height, colour options and bitmap data starting point) and the bitmap pixels, each with its own colour value. BMP files support bit depths of up to 32 bits per pixel.

These files may store different colour depths per pixel, depending on the number of bits per pixel specified in the file header. They may also be stored using a grayscale colour scheme (explained in further detail later).

- .jpg - This is a compressed image format standardised by the Joint Photographic Experts Group (JPEG). It supports up to 24-bit colour and compresses photographs well, so it is commonly used for storing digital photos, and most digital cameras save images as JPG files by default.

JPEG is also a common format for publishing Web graphics since the JPEG compression algorithm significantly reduces the file size of images. However, the lossy compression used by JPEG may noticeably reduce the image quality if high amounts of compression are used.

- .gif - These are image files that may contain up to 256 indexed colours; the colour palette may be a predefined set of colours or may be adapted to the colours in the image. GIF is a lossless format, meaning the clarity of the image is not compromised by GIF compression.

GIFs are a common format for web graphics, especially small images and images that contain text, such as navigation buttons. However, JPEG (.JPG) images are better for showing photos because they are not limited to 256 colours.

GIF images can also be animated and saved as "animated GIFs", which are often used to display basic animations on websites, and may also include transparent pixels, which allow them to blend with different colour backgrounds. However, pixels in a GIF image must be either fully transparent or fully opaque, so the transparency cannot be faded as it can in a PNG image.

- .png - An image file stored in the Portable Network Graphics (PNG) format. Like a .GIF file, it can contain a bitmap of indexed colours under lossless compression, but without GIF's patent limitations; it is commonly used to store graphics for Web images.

The PNG format was created in response to limitations with the GIF format, primarily to increase colour support and to provide an image format without a patent license. Additionally, while GIF images only support fully opaque or fully transparent pixels, PNG images may include an 8-bit transparency channel, which allows the image colors to fade from opaque to transparent.

PNG images cannot be animated like GIF images, although the related .MNG format can be animated. PNG images do not provide CMYK colour support because the format was designed for on-screen use rather than professional print graphics. PNG images are now supported by most Web browsers.

- .tiff - This is a high-quality graphics format often used for storing images with many colours, such as digital photos. TIFF is short for "Tagged Image File Format", and the format includes support for layers and multiple pages.

TIFF files can be saved in an uncompressed (lossless) format or may use JPEG (lossy) compression. They may also use LZW lossless compression, which reduces the TIFF file size but does not reduce the quality of the image.
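Each of the formats listed above starts with a recognisable signature (a "magic number"), which programs can use to identify a file even if its extension is wrong. The following Python sketch checks for these signatures; it is a rough guesser rather than a full validator, and `identify_format` and `identify_file` are hypothetical names.

```python
SIGNATURES = [
    (b"\x89PNG\r\n\x1a\n", "PNG"),
    (b"\xff\xd8\xff", "JPEG"),
    (b"GIF87a", "GIF"),
    (b"GIF89a", "GIF"),
    (b"II*\x00", "TIFF (little-endian)"),
    (b"MM\x00*", "TIFF (big-endian)"),
    (b"BM", "BMP"),
]


def identify_format(header: bytes) -> str:
    """Guess a raster format from the signature at the start of a file."""
    for signature, name in SIGNATURES:
        if header.startswith(signature):
            return name
    return "unknown"


def identify_file(path: str) -> str:
    """Read the first bytes of a file on disk and identify its format."""
    with open(path, "rb") as f:
        return identify_format(f.read(8))  # the longest signature (PNG) is 8 bytes


print(identify_format(b"GIF89a\x01\x00"))    # GIF
print(identify_format(b"\xff\xd8\xff\xe0"))  # JPEG
```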

Vector Image Format

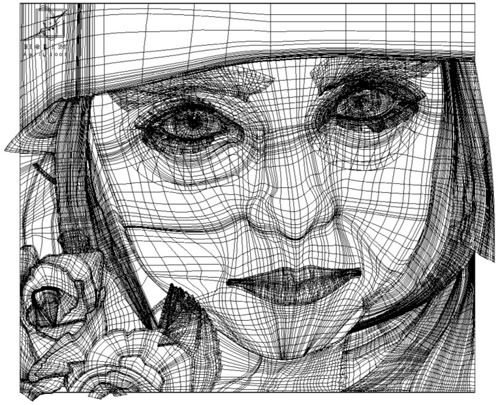

Vector images are a collection of individual objects rather than picture elements (pixels). A vector image can be composed of points connected by lines, curves and shapes with editable attributes such as colour, fill and outline, all of which are individually scalable. The image to the left shows the basics of how a picture would look with just the lines, curves and shapes and no fill colour.

Each individual object contained in a vector image is defined by a mathematical equation. This means that vector images are not resolution dependent, so each vector object is scalable and can generally be resized without any loss of image quality. This makes vector images ideal for graphics such as maps and company logos that often require scaling. However, when some vector images are reduced in size too drastically, fine lines may disappear, and too much enlargement can make drawing mistakes apparent.
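The "mathematical equation" behind a vector object is often a Bézier curve. This Python sketch, assuming a cubic Bézier defined by four control points (the kind of curve used in formats such as SVG), shows that scaling a vector shape only means multiplying its control points, after which the curve is recalculated exactly at the new size. The function names and coordinates are hypothetical.

```python
Point = tuple[float, float]


def cubic_bezier(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Point on a cubic Bezier curve at parameter t (0 <= t <= 1)."""
    u = 1 - t
    x = u**3 * p0[0] + 3 * u**2 * t * p1[0] + 3 * u * t**2 * p2[0] + t**3 * p3[0]
    y = u**3 * p0[1] + 3 * u**2 * t * p1[1] + 3 * u * t**2 * p2[1] + t**3 * p3[1]
    return x, y


def scale(points: list[Point], factor: float) -> list[Point]:
    """Scaling a vector shape only means multiplying its control points."""
    return [(x * factor, y * factor) for x, y in points]


# A hypothetical curve stored as just four control points
curve = [(0, 0), (10, 20), (30, 20), (40, 0)]
big_curve = scale(curve, 10)

# The curve is recalculated at the new size, so it stays smooth
print(cubic_bezier(*curve, t=0.5))      # (20.0, 15.0)
print(cubic_bezier(*big_curve, t=0.5))  # (200.0, 150.0)
```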

Vector images have no background of white pixels. This means that you can put one vector object above another, and only the object's own shape covers what is underneath, rather than a whole rectangle blocking it out.

Vector images typically have small file sizes because the file contains only the mathematical equations that determine the Bézier curves defining the image. A vector image file does not store the image as pixels; the image is drawn from those equations when it is displayed.

Although they can be highly detailed, vector images are not appropriate for producing realistic-looking photos and images. Improvements to vector drawing software now enable users to approximate photorealistic vector objects: attributes such as transparency can be adjusted, bitmap textures can be applied to vector objects, and shading and blending are also available. Still, most vector images look like sharply drawn cartoon images, as can be seen from the comparison below.


Bit Depth

Bit depth is the number of bits (0s and 1s) used to specify each colour, which determines how many unique colours are available in an image's colour palette. This does not mean that the image necessarily uses all of these colours, but that it can specify colours with that level of precision. Images with higher bit depths can have more shades or colours since there are more combinations of 0s and 1s available.

Every colour pixel in a digital image is created through some combination of the three primary colours: red, green and blue. Each primary colour is often referred to as a "colour channel" and can take a range of intensity values set by its bit depth. The bit depth of each primary colour is termed the "bits per channel". The "bits per pixel" (bpp) is the sum of the bits in all three colour channels and determines the total number of colours available at each pixel.

Most colour images from digital cameras have 8 bits per channel, so each channel is described by a string of eight 0s and 1s. This allows 256 different combinations, translating into 256 different intensity values for each primary colour. When all three primary colours are combined at each pixel, this allows for as many as 16.7 million different colours (256 × 256 × 256 = 16,777,216), or "true colour". This is referred to as 24 bits per pixel, since each pixel is composed of three 8-bit colour channels (3 × 8 = 24). Below is a comparison table, with the common names used for each value.
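Combining three channels into one pixel value can be sketched in Python with bit shifts. This assumes 8 bits per channel stored in red, green, blue order, which is a common layout but not the only one; `pack_rgb` and `unpack_rgb` are hypothetical helpers.

```python
def pack_rgb(red: int, green: int, blue: int) -> int:
    """Pack three 8-bit channels into one 24-bit number, red in the top 8 bits."""
    return (red << 16) | (green << 8) | blue


def unpack_rgb(value: int) -> tuple[int, int, int]:
    """Split a 24-bit colour number back into its three 8-bit channels."""
    return (value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF


orange = pack_rgb(255, 165, 0)
print(hex(orange))         # 0xffa500 (the same notation as web colour codes)
print(unpack_rgb(orange))  # (255, 165, 0)
print(pack_rgb(255, 255, 255) == 2 ** 24 - 1)  # True: white is the largest 24-bit value
```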

| Bits Per Pixel | Number of Colours Available | Common Name(s) |
|---|---|---|
| 1 | 2 | Monochrome |
| 2 | 4 | CGA |
| 4 | 16 | EGA |
| 8 | 256 | VGA |
| 16 | 65,536 | XGA, High Colour |
| 24 | 16,777,216 | SVGA, True Colour |
| 32 | 16,777,216 + transparency | |
| 48 | About 281 trillion | |
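Each row of the table follows the same rule: with n bits per pixel there are 2 to the power of n possible values. A short Python sketch reproduces the counts (`colours_available` is a hypothetical name). The 32-bit row is the exception, because those extra 8 bits usually hold transparency (an alpha channel) rather than more colours, so it is left out here.

```python
def colours_available(bits_per_pixel: int) -> int:
    """Each extra bit doubles the number of possible values: 2 ** bits."""
    return 2 ** bits_per_pixel


for bits in (1, 2, 4, 8, 16, 24, 48):
    print(f"{bits:>2} bpp -> {colours_available(bits):,} colours")
```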

Bit depth visualisation

As you can see from the comparison below, there are no obvious differences between 16-bit and 24-bit depth.

16 bit depth

However, when compared with an 8-bit image, the difference in tone and colour is very apparent.
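The loss of tone at lower bit depths can be approximated in Python by discarding the lower bits of each channel. This sketch assumes the "RGB565" layout for 16-bit colour and the simple "RGB332" layout for 8-bit colour; many real 8-bit images use a 256-colour palette instead, which can look better. The helper names and sample colour are hypothetical.

```python
def reduce_channel(value: int, bits: int) -> int:
    """Keep only the top `bits` bits of an 8-bit channel, then rescale to 0-255."""
    levels = 2 ** bits - 1
    quantised = value >> (8 - bits)  # e.g. 8 bits -> 5 bits keeps 32 levels
    return round(quantised * 255 / levels)


def to_16_bit(r: int, g: int, b: int) -> tuple[int, int, int]:
    """'High colour' RGB565: 5 bits red, 6 bits green, 5 bits blue."""
    return reduce_channel(r, 5), reduce_channel(g, 6), reduce_channel(b, 5)


def to_8_bit(r: int, g: int, b: int) -> tuple[int, int, int]:
    """One simple 8-bit layout, RGB332: 3 bits red, 3 bits green, 2 bits blue."""
    return reduce_channel(r, 3), reduce_channel(g, 3), reduce_channel(b, 2)


sample = (224, 172, 105)     # hypothetical 24-bit colour
print(to_16_bit(*sample))    # (230, 174, 107): very close to the original
print(to_8_bit(*sample))     # (255, 182, 85): a visibly different colour
```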

Colour Space

Grayscale

What is Grayscale?

A grayscale digital image is an image in which the value of each pixel is a single sample. Images of this sort, also known as black-and-white, are composed exclusively of shades of gray, varying from black at the weakest intensity to white at the strongest.

Grayscale images are distinct from one-bit black-and-white images, which in the context of computer imaging are images with only two colours, black and white (also called bilevel or binary images). Grayscale images have many shades of gray in between. Grayscale images are also called monochromatic, denoting the absence of any chromatic variation.

In computing, although grayscale intensities can be thought of as fractions between 0 and 1, image pixels are stored as binary integers. Some early grayscale monitors could only show up to sixteen (4-bit) different shades, but today grayscale images (such as photographs) intended for visual display (both on screen and printed) are commonly stored with 8 bits per sampled pixel, which allows 256 different intensities (i.e. shades of gray) to be recorded, typically on a non-linear scale. This format is very convenient for programming because a single pixel then occupies a single byte.

No matter what pixel depth is used, the binary representations assume that 0 is black and the maximum value (255 at 8 bpp, 65,535 at 16 bpp, etc.) is white, if not otherwise noted.
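A colour pixel can be reduced to a single grayscale sample by weighting its channels. This Python sketch uses the ITU-R BT.601 luma weights, one common choice among several, and also shows how an 8-bit level maps onto the 16-bit range so that white stays at the maximum value. The function names are hypothetical.

```python
def to_grayscale(red: int, green: int, blue: int) -> int:
    """8-bit gray level using the ITU-R BT.601 luma weights."""
    # Green is weighted most heavily because the eye is most sensitive to it
    return round(0.299 * red + 0.587 * green + 0.114 * blue)


def to_16_bit_gray(gray8: int) -> int:
    """Rescale an 8-bit gray level (0-255) to 16 bits (0-65,535)."""
    return gray8 * 257  # 255 * 257 == 65535, so white stays white


print(to_grayscale(0, 0, 0))        # 0 (black)
print(to_grayscale(255, 255, 255))  # 255 (white)
print(to_16_bit_gray(255))          # 65535
```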

Colour images are often built of several stacked colour channels, each of them representing value levels of the given channel. For example, RGB images are composed of three independent channels for red, green and blue primary colour components; CMYK images have four channels for cyan, magenta, yellow and black ink plates, etc.

To the left is an example of colour channel splitting of a full RGB colour image. The column at left shows the isolated colour channels in natural colours, while at right are their grayscale equivalents:

YUV (Luminance and Chrominance)

YUV colour space is unusual in that the Y component determines the brightness of the colour (referred to as luminance or luma), while the U and V components determine the colour itself (the chroma). Y ranges from 0 to 1 (or 0 to 255 in digital formats), while U and V range from -0.5 to 0.5 (or -128 to 127 in signed digital form, or 0 to 255 in unsigned form). Some standards further limit the ranges so that out-of-bounds values can indicate special information such as synchronisation.

One interesting aspect of YUV is that you can throw out the U and V components and get a grayscale image. Since the human eye is more responsive to brightness than it is to colour, many lossy image compression formats throw away half or more of the samples in the chroma channels to reduce the amount of data to deal with, without severely degrading the image quality.
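The Y/U/V split and chroma subsampling can be sketched in Python. The ranges described above (Y from 0 to 1, U and V from -0.5 to 0.5) match the YCbCr form of YUV used in digital formats such as JPEG, so this sketch assumes the BT.601 weights and scaling for that form, plus 4:2:0 subsampling (one chroma sample per 2x2 block of pixels). The names are hypothetical.

```python
def rgb_to_ycbcr(r: float, g: float, b: float) -> tuple[float, float, float]:
    """R, G, B in 0..1 -> Y in 0..1 and Cb, Cr in -0.5..0.5 (BT.601 weights)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b  # luma: the "grayscale" part
    cb = (b - y) / 1.772                   # blue minus brightness
    cr = (r - y) / 1.402                   # red minus brightness
    return y, cb, cr


def samples_per_frame(width: int, height: int, subsampled: bool) -> int:
    """Count stored samples; 4:2:0 keeps one chroma sample per 2 x 2 block."""
    luma = width * height
    chroma = (width // 2) * (height // 2) if subsampled else width * height
    return luma + 2 * chroma


y, cb, cr = rgb_to_ycbcr(0.0, 0.0, 1.0)           # pure blue
print(round(y, 3), round(cb, 3), round(cr, 3))    # 0.114 0.5 -0.081

print(samples_per_frame(640, 480, subsampled=False))  # 921600
print(samples_per_frame(640, 480, subsampled=True))   # 460800: half the data
```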

Comparing YUV to the RGB colour model, the full colour image on the left is separated into a Red channel, a Green channel and a Blue channel, which is fairly simple. In YUV, by contrast, one channel provides essentially a “grayscale” image, and the colour information is separated into two channels: one for blue information minus the brightness, and one for red information minus the brightness. In this image, the blue channel shows purple/yellow colours, and the red channel shows more red/cyan colours.

Image Capture

There are many forms of image capture available to users, such as scanners and digital cameras. Other items, such as data storage, can also be classed as part of the capture process. Below is a brief explanation of each form of capture and its applications.

Scanners

The basic principle of a scanner is to analyze an image and process it in some way. Image and text capture (optical character recognition or OCR) allow you to save information to a file on your computer. You can then alter or enhance the image, print it out or use it on a web page.

The main component of the scanner is what is known as a charge-coupled device (CCD). A CCD is a collection of tiny light-sensitive diodes (semiconductor devices that let current flow in one direction), which convert photons (light) into electrons (electrical charge). These diodes are called photosites.

In a nutshell, each photosite is sensitive to light: the brighter the light that hits a single photosite, the greater the electrical charge that will accumulate at that site.

An example of a CCD in a scanner can be seen to the right.

When a document is placed into the scanner and scanning begins, a lamp is used to illuminate the document. The lamp in newer scanners is either a cold cathode fluorescent lamp (CCFL) or a xenon lamp.

The image of the document is reflected by an angled mirror to another mirror. In some scanners, there are only two mirrors while others use a three mirror approach. Each mirror is slightly curved to focus the image it reflects onto a smaller surface.

The last mirror reflects the image onto a lens. This lens then focuses the image through a filter on the CCD array and splits the image into three smaller versions of the original. Each smaller version passes through a colour filter (either red, green or blue) onto a discrete section of the CCD array. The scanner combines the data from the three parts of the CCD array into a single full colour image.

Scanners vary in resolution and sharpness. Most flatbed scanners have a true hardware resolution of at least 300x300 dots per inch (dpi). The scanner's dpi is determined by the number of sensors in a single row of the CCD array and by the precision of the stepper motor.
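The effect of scanner resolution on image size can be worked out with simple arithmetic. This Python sketch assumes a US Letter page (8.5 x 11 inches) scanned at 300 dpi in 24-bit colour, with no compression; the helper names are hypothetical.

```python
def scan_dimensions(width_in: float, height_in: float, dpi: int) -> tuple[int, int]:
    """Pixel dimensions of a scan: page size in inches x scanner resolution."""
    return round(width_in * dpi), round(height_in * dpi)


def uncompressed_bytes(width_px: int, height_px: int, bits_per_pixel: int = 24) -> int:
    """Raw image size before any file compression."""
    return width_px * height_px * bits_per_pixel // 8


# A hypothetical US Letter page scanned at 300 dpi
w, h = scan_dimensions(8.5, 11, 300)
print(w, h)                                   # 2550 3300
print(f"{uncompressed_bytes(w, h):,} bytes")  # 25,245,000 bytes (about 24 MB)
```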

Sharpness depends mainly on the quality of the optics used to make the lens and the brightness of the light source. A bright xenon lamp and high-quality lens will create a much clearer, and therefore sharper, image than a standard fluorescent lamp and basic lens.

The bit depth of a scanner refers to the number of colours that the scanner is capable of reproducing. Each pixel requires 24 bits to create standard true colour and virtually all scanners on the market support this. Many of them offer bit depths of 30 or 36 bits. They still only output in 24-bit color, but perform internal processing to select the best possible choice out of the colours available in the increased palette. There are many opinions about whether there is a noticeable difference in quality between 24-, 30- and 36-bit scanners.

Digital Camera

Essentially, a digital image is just a long string of 1s and 0s that represent all the tiny coloured dots or pixels that collectively make up the image.

If you want to get a picture into this form, you have two options:

The image sensor employed by most digital cameras is a charge couple device (CCD) as explained in the above section regarding scanners.However, some cameras use Complementary Metal Oxide Semiconductor (CMOS) technology instead. Both CCD and CMOS image sensors convert light into electrons. To the right is an example CMOS image sensor, and below is an example of a digital camera CCD sensor.

The image sensor employed by most digital cameras is a charge couple device (CCD) as explained in the above section regarding scanners.However, some cameras use Complementary Metal Oxide Semiconductor (CMOS) technology instead. Both CCD and CMOS image sensors convert light into electrons. To the right is an example CMOS image sensor, and below is an example of a digital camera CCD sensor.

Once the sensor converts the light into electrons, it reads the value (accumulated charge) of each cell in the image. This is where the differences between the two main sensor types kick in:

The amount of detail that the camera can capture is called the resolution and it is measured in pixels. The more pixels a camera has, the more detail it can capture and the larger pictures can be without becoming blurry or "grainy."

Some typical resolutions include:

There are several ways of recording the three colours in a digital camera. The highest quality cameras use three separate sensors, each with a different filter. A beam splitter directs light to the different sensors. Think of the light entering the camera as water flowing through a pipe. Using a beam splitter would be like dividing an identical amount of water into three different pipes. Each sensor gets an identical look at the image; but because of the filters, each sensor only responds to one of the primary colours.

Sharpness depends mainly on the quality of the optics used to make the lens and the brightness of the light source. A bright xenon lamp and high-quality lens will create a much clearer, and therefore sharper, image than a standard fluorescent lamp and basic lens.

The bit depth of a scanner refers to the number of colours that the scanner is capable of reproducing. Standard true colour requires 24 bits per pixel (8 bits each for red, green and blue), and virtually all scanners on the market support this. Many of them offer bit depths of 30 or 36 bits. These scanners still output only 24-bit colour, but perform internal processing to select the best possible choice from the colours available in the larger palette. Opinions differ about whether there is a noticeable difference in quality between 24-, 30- and 36-bit scanners.
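To put these bit depths in perspective, the number of colours a pixel can hold is 2 raised to the bit depth. The sketch below assumes the bits are split equally between the red, green and blue channels (8, 10 or 12 bits each). Its `to_8bit` helper is hypothetical: it reduces a 10- or 12-bit channel to 8 bits by simply dropping the extra low-order bits, which is a simplification and not the internal processing a real scanner uses to choose the best output colour.

```python
def colour_count(bit_depth: int) -> int:
    """Number of distinct colours a pixel with `bit_depth` bits can represent."""
    return 2 ** bit_depth


def to_8bit(value: int, bits_per_channel: int) -> int:
    """Reduce one channel sample to 8 bits by discarding its least significant bits.

    Hypothetical helper: a plain bit shift, not a real scanner's processing.
    """
    if bits_per_channel < 8:
        raise ValueError("channel has fewer than 8 bits")
    if not 0 <= value < 2 ** bits_per_channel:
        raise ValueError("value out of range for this bit depth")
    return value >> (bits_per_channel - 8)


for depth in (24, 30, 36):
    # Assumes the bits are shared equally between red, green and blue
    print(f"{depth}-bit: {colour_count(depth):,} colours ({depth // 3} bits per channel)")

print(to_8bit(4095, 12))  # 255: the brightest 12-bit value maps to the brightest 8-bit value
print(to_8bit(2048, 12))  # 128
```

A 24-bit pixel can take 16,777,216 different values, while a 36-bit pixel can take over 68 billion, which is why the extra depth is mainly useful as headroom during processing.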

Digital Camera

Essentially, a digital image is just a long string of 1s and 0s that represent all the tiny coloured dots or pixels that collectively make up the image.

If you want to get a picture into this form, you have two options:

- You can take a photograph using a conventional film camera, process the film chemically, print it onto photographic paper and then use a digital scanner to sample the print (record the pattern of light as a series of pixel values).

- You can directly sample the original light that bounces off your subject, immediately breaking that light pattern down into a series of pixel values -- in other words, you can use a digital camera.
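As a concrete illustration of the "long string of 1s and 0s" described above, the short sketch below flattens a made-up 2x2 image into bits. It assumes 24-bit colour, with each pixel stored as red, green and blue values of 8 bits each, written row by row; real image formats add headers and may order or compress the data differently.

```python
# A made-up 2x2 image: each pixel is an (R, G, B) tuple with 8 bits per channel
image = [
    [(255, 0, 0), (0, 255, 0)],      # red, green
    [(0, 0, 255), (255, 255, 255)],  # blue, white
]


def to_bitstring(pixels) -> str:
    """Concatenate every channel of every pixel, row by row, as an 8-bit binary number."""
    return "".join(
        format(channel, "08b")
        for row in pixels
        for pixel in row
        for channel in pixel
    )


bits = to_bitstring(image)
print(len(bits))  # 96 (4 pixels x 3 channels x 8 bits)
print(bits[:24])  # 111111110000000000000000 (the red pixel)
```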

The image sensor employed by most digital cameras is a charge-coupled device (CCD), as explained in the scanner section above. However, some cameras use Complementary Metal Oxide Semiconductor (CMOS) technology instead. Both CCD and CMOS image sensors convert light into electrons. To the right is an example of a CMOS image sensor, and below is an example of a digital camera's CCD sensor.

Once the sensor converts the light into electrons, it reads the value (accumulated charge) of each cell in the image. This is where the differences between the two main sensor types kick in:

- A CCD transports the charge across the chip and reads it at one corner of the array. An analog-to-digital converter (ADC) then turns each pixel's value into a digital value by measuring the amount of charge at each photosite and converting that measurement to binary form.

- CMOS devices use several transistors at each pixel to amplify and move the charge using more traditional wires.

- CCD sensors create high-quality, low-noise images. CMOS sensors are generally more susceptible to noise. (Image noise is where the picture appears to have a 'grainy' texture, as shown in the picture below.)

- Because each pixel on a CMOS sensor has several transistors located next to it, the light sensitivity of a CMOS chip is lower. Many of the photons hit the transistors instead of the photodiode.

- CMOS sensors traditionally consume little power. CCDs, on the other hand, use a process that consumes a lot of power: as much as 100 times more than an equivalent CMOS sensor.

- CCD sensors have been mass-produced for a longer period of time, so they are more mature. They tend to have higher-quality pixels, and more of them.
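The analog-to-digital conversion described in the first point can be sketched as below. This is a deliberately simplified model, not how any specific camera works: it assumes each photosite's charge is given as a fraction of the largest charge the photosite can hold (its full-well capacity), and the hypothetical `adc` function maps that range linearly onto 2^bits levels.

```python
def adc(charge: float, full_well: float = 1.0, bits: int = 8) -> int:
    """Quantise an analog charge reading into an unsigned integer with `bits` bits.

    Simplified model: the charge is clipped to [0, full_well] and scaled linearly.
    """
    levels = 2 ** bits - 1
    clipped = min(max(charge, 0.0), full_well)  # over-exposed photosites saturate
    return round(clipped / full_well * levels)


readings = [0.0, 0.25, 0.5, 1.0, 1.3]  # made-up charges; 1.3 is over-exposed
digital = [adc(q) for q in readings]
print(digital)                              # [0, 64, 128, 255, 255]
print([format(v, "08b") for v in digital])  # the same values in binary form
```

Increasing `bits` to 12 or 14 gives finer steps between brightness levels, at the cost of more data per pixel.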

Camera Image Resolution

The amount of detail that a camera can capture is called its resolution, and it is measured in pixels. The more pixels a camera has, the more detail it can capture, and the larger its pictures can be printed without becoming blurry or "grainy."

Some typical resolutions include:

- 256x256 - Found on very cheap cameras, this resolution is so low that the picture quality is almost always unacceptable. This is 65,536 total pixels.

- 640x480 - This is the low end on most "real" cameras. This resolution is ideal for e-mailing pictures or posting pictures on a Web site.

- 1216x912 - This is a "megapixel" image size -- 1,109,000 total pixels -- good for printing pictures.

- 1600x1200 - With almost 2 million total pixels, this is "high resolution." Pictures taken at this resolution can be printed at 4x5 inches with the same quality that you would get from a photo lab.

- 2240x1680 - Found on 4 megapixel cameras -- the current standard -- this allows even larger printed photos, with good quality for prints up to 16x20 inches.

- 4064x2704 - A top-of-the-line digital camera with 11.1 megapixels takes pictures at this resolution. At this setting, you can create 13.5x9 inch prints with no loss of picture quality.
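The pixel counts in this list are simply width multiplied by height. The sketch below also estimates the largest print size for each resolution, assuming a print density of 300 pixels per inch; that figure is a common rule of thumb for photo-quality prints and an assumption here, not a value taken from the list.

```python
PPI = 300  # assumed print density (pixels per inch)


def describe(width: int, height: int, ppi: int = PPI) -> tuple[int, float, float, float]:
    """Return total pixels, megapixels, and print width and height (inches) at `ppi`."""
    total = width * height
    return total, total / 1_000_000, width / ppi, height / ppi


for w, h in [(256, 256), (640, 480), (1216, 912), (1600, 1200), (2240, 1680), (4064, 2704)]:
    total, mp, print_w, print_h = describe(w, h)
    print(f"{w}x{h}: {total:,} pixels ({mp:.1f} MP), "
          f"about {print_w:.1f} x {print_h:.1f} in at {PPI} ppi")
```

At 300 ppi, 4064x2704 works out to about 13.5 x 9.0 inches, matching the print size quoted above, and 1600x1200 gives about 5.3 x 4.0 inches, in line with the 4x5 inch figure. (The computed 11.0 MP is slightly below the quoted 11.1 megapixels; a camera's headline figure may count a few photosites that are not part of the saved image.)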

Unfortunately, each photosite is colourblind: it only keeps track of the total intensity of the light that strikes its surface. To get a full-colour image, most sensors use filtering to look at the light in its three primary colours. Once the camera has recorded all three colours, it combines them to create the full spectrum.
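Combining the three colour readings can be sketched as below. This assumes the simplest case, where a separate red, green and blue reading exists for every pixel position (as in cameras that use three sensors and a beam splitter); single-sensor cameras must estimate the missing colours at each photosite, which this sketch does not attempt. The intensity values are made up.

```python
def combine(red, green, blue):
    """Merge three single-colour intensity grids into one grid of (R, G, B) pixels.

    Each argument is a list of rows; each row holds one reading (0-255) per photosite.
    """
    shape = [len(row) for row in red]
    if [len(row) for row in green] != shape or [len(row) for row in blue] != shape:
        raise ValueError("all three colour planes must have the same dimensions")
    return [list(zip(r_row, g_row, b_row)) for r_row, g_row, b_row in zip(red, green, blue)]


# Made-up readings for a 1x2 image: a yellow-ish pixel, then a blue one
red = [[250, 10]]
green = [[240, 20]]
blue = [[30, 200]]
print(combine(red, green, blue))  # [[(250, 240, 30), (10, 20, 200)]]
```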

Image Storage

It's all well and good to be able to capture images and create various graphics, but all of them need to be stored somewhere. The following section details how images are stored, and how compression and the choice of file format affect image quality.

A digital image’s file size is mostly determined by its resolution and its file format. Other factors that can influence the file size, depending on the chosen format, are the number of colour channels per pixel, the compression level, the digital camera’s settings and the complexity of the scene being photographed. The last three factors matter mainly when using the JPEG format. The most important rule to remember is that a higher image resolution (more megapixels) leads to a larger file size.
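For an uncompressed image, the link between resolution, colour channels and file size is straightforward multiplication. A minimal sketch, assuming 3 colour channels at 8 bits each by default and ignoring file headers and metadata (which real TIFF or RAW files include):

```python
def uncompressed_size(width: int, height: int, channels: int = 3, bits_per_channel: int = 8) -> int:
    """Size in bytes of the raw pixel data, excluding any headers or metadata."""
    total_bits = width * height * channels * bits_per_channel
    return (total_bits + 7) // 8  # round up to whole bytes


size = uncompressed_size(2240, 1680)  # a 4 megapixel image from the list above
print(f"{size:,} bytes = {size / 1024 ** 2:.1f} MiB")  # 11,289,600 bytes = 10.8 MiB
```

Doubling the bits per channel to 16 doubles the size, and doubling both the width and the height quadruples it.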

Determining an image’s file size in advance for the TIFF and RAW formats is relatively easy, but a JPEG image’s size cannot be predicted exactly. JPEG files also cannot all have the same file size, because every scene is unique and therefore compresses differently when shooting in JPEG mode.
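Why a compressed file's size cannot be known in advance can be illustrated with Python's standard `zlib` module. zlib's DEFLATE algorithm is lossless and is not JPEG, so this is only a stand-in: it shows that two images with identical resolution can compress to very different sizes depending on their content. Both "images" below are made-up 256x256 greyscale arrays.

```python
import random
import zlib

WIDTH = HEIGHT = 256

# A flat grey 'sky': every pixel has the same value
flat = bytes([128]) * (WIDTH * HEIGHT)

# A 'busy' scene: random pixel values (seeded so the result is repeatable)
rng = random.Random(42)
busy = bytes(rng.randrange(256) for _ in range(WIDTH * HEIGHT))

for name, pixels in (("flat", flat), ("busy", busy)):
    compressed = zlib.compress(pixels, 9)  # 9 = maximum compression level
    print(f"{name}: {len(pixels):,} bytes -> {len(compressed):,} bytes compressed")
```

Both inputs are 65,536 bytes before compression; the flat image shrinks to a tiny fraction of that, while the random one stays at roughly its original size.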

The end... for now!

I hope this blog has helped you understand digital technology! Check back next week for a post on Video in Interactive Media, which aims to arm you with all the knowledge you need to understand the various platforms and formats, video compression, frame rates and the applications of interactive media.

Take care and see you all next week!

Well done, Alistair! You have comprehensively explained the theory and applications of digital graphics technology. You have supported your argument with examples that elucidate the points you make and have clearly illustrated the breadth of technologies used in digital graphics. You provide a high quality of expression, and your technical vocabulary is secure and used correctly and confidently at all times.
